jrista said:

Simply proclaiming that you are smarter than everyone else doesn't help the discussion. It's cheap and childish. Why not enlighten us in a way that doesn't require a Ph.D. to understand what you are attempting to explain, so the discussion can continue? I don't know as much as the three of you, but I would like to learn. Hjulenissen and TheSuede are very helpful in their part of the debate... however, you, in your more recent posts, have just become snide, snarky and egotistical. Grow up a little and contribute to the discussion, rather than try to shut it down.

I did already. And I was not the first to try to shut it down. OK, last attempt.

The image projected on the sensor is blurred with some kernel (a.k.a. PSF), which is most often smooth (i.e., it has many derivatives). One notable exception is motion blur, where the kernel can be an arc of a curve; things change there. The blur is modeled by a convolution. Note: no pixels here. Imagine a sensor painted over with smooth paint.
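To make the forward model concrete, here is a minimal sketch in Python/NumPy. The continuous image is only approximated by a fine periodic grid (a numerical stand-in, not a pixel model), and the bar-shaped scene, the grid size `n`, and the PSF width `sigma` are arbitrary choices for illustration. It blurs the scene by a direct circular convolution with a Gaussian PSF and checks that the result agrees with the FFT route (the convolution theorem).

```python
import numpy as np

def periodic_gaussian_psf(n, dx, sigma):
    """Gaussian PSF on a periodic grid of n points, centered at index 0 (FFT order),
    normalized to unit sum so the blur preserves total brightness."""
    i = np.arange(n)
    dist = np.minimum(i, n - i) * dx          # periodic distance from x = 0
    k = np.exp(-0.5 * (dist / sigma) ** 2)
    return k / k.sum()

def blur_direct(f, k):
    """Circular convolution g[i] = sum_j f[j] * k[(i - j) mod n], no FFT involved."""
    n = len(f)
    idx = (np.arange(n)[:, None] - np.arange(n)[None, :]) % n
    return k[idx] @ f

def blur_fft(f, k):
    """Same convolution via the convolution theorem: FT(f * k) = FT(f) . FT(k)."""
    return np.real(np.fft.ifft(np.fft.fft(f) * np.fft.fft(k)))

n, dx, sigma = 256, 1.0 / 256, 0.01
x = np.arange(n) * dx
f = ((x >= 0.25) & (x < 0.75)).astype(float)   # a sharp-edged "scene"
k = periodic_gaussian_psf(n, dx, sigma)

g_direct = blur_direct(f, k)
g_fft = blur_fft(f, k)
print(np.max(np.abs(g_direct - g_fft)))   # agrees up to floating-point rounding
```

The direct version is O(n²) and is only there for the comparison; in practice one would use the FFT product.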

Take the Fourier transform of the convolution. You get the product of the FTs, $\mathcal{F}(f * k) = \mathcal{F}(f)\,\mathcal{F}(k)$, where $f$ is the sharp image and $k$ the PSF. Now, since the PSF is smooth, say something like a Gaussian, its FT decays rapidly. The effect of the blur is to multiply the high frequencies by a function that is very small there. You could just divide to get a reconstruction, right? But you would be dividing something small plus noise/errors by something that is also small. This is the well-known small-denominator problem; google it. Beyond some frequency, determined by the noise level and by how fast the FT of the PSF decays, you have more noise than signal. That's it, basically. The usual techniques cut near that frequency in one way or another.
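A small numerical sketch of the small-denominator problem, using a periodic grid as a stand-in for the continuous image, a Gaussian PSF, and additive Gaussian noise. The values of `sigma`, `noise_std`, and the cutoff threshold `tau` are assumptions picked by hand. The "cutoff" reconstruction is a plain hard spectral cutoff, one of the simplest of the usual techniques (Wiener and Tikhonov filtering are smoother variants); it is not meant as any specific method from the post.

```python
import numpy as np

def periodic_gaussian_psf(n, dx, sigma):
    """Unit-sum Gaussian PSF on a periodic grid, centered at index 0 (FFT order)."""
    i = np.arange(n)
    dist = np.minimum(i, n - i) * dx
    k = np.exp(-0.5 * (dist / sigma) ** 2)
    return k / k.sum()

def rms(e):
    return float(np.sqrt(np.mean(e ** 2)))

n, dx = 512, 1.0 / 512
sigma = 0.003        # PSF width (assumed)
noise_std = 1e-3     # noise level (assumed)
tau = 1e-2           # spectral cutoff threshold (assumed, tuned by hand)

x = np.arange(n) * dx
f = ((x >= 0.25) & (x < 0.75)).astype(float)          # true scene
K = np.fft.fft(periodic_gaussian_psf(n, dx, sigma))   # FT of the PSF: decays fast

rng = np.random.default_rng(0)
g = np.real(np.fft.ifft(np.fft.fft(f) * K)) + noise_std * rng.standard_normal(n)
G = np.fft.fft(g)

# Naive inverse: divide everywhere, including where |K| is tiny.
f_naive = np.real(np.fft.ifft(G / K))

# Cutoff inverse: keep only frequencies where the PSF's FT exceeds tau,
# i.e. where signal still dominates noise; zero out the rest.
keep = np.abs(K) > tau
f_cut = np.real(np.fft.ifft(np.where(keep, G / K, 0.0)))

print("min |K|:", np.abs(K).min())
print("naive RMS error :", rms(f_naive - f))
print("cutoff RMS error:", rms(f_cut - f))
```

With these settings the naive division should give an error dominated by amplified high-frequency noise, orders of magnitude larger than the cutoff version. The cutoff version still loses the finest detail at the edges, which is the price of not dividing by the small numbers.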

The errors that I mentioned can have many causes. For example, not knowing the exact PSF, or errors in its approximation/discretization even if we somehow knew it. Then there is the usual noise, etc.
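The PSF-mismatch error can be quantified without any noise at all. A sketch, assuming both the true and the assumed PSF are Gaussians (the widths `sigma_true` and `sigma_assumed` are chosen here for illustration) and using the continuous FT of a unit-mass Gaussian, exp(-2π²σ²ξ²): dividing by the wrong PSF multiplies the scene spectrum by K_true/K_assumed.

```python
import numpy as np

def mismatch_gain(xi, sigma_true, sigma_assumed):
    """Factor multiplying the scene spectrum when a noise-free image blurred by a
    Gaussian PSF of width sigma_true is divided by the FT of a Gaussian of width
    sigma_assumed:  K_true / K_assumed = exp(2 pi^2 (sa^2 - st^2) xi^2).
    Written as one exponential to avoid 0/0 at high frequencies."""
    xi = np.asarray(xi, dtype=float)
    return np.exp(2.0 * np.pi**2 * (sigma_assumed**2 - sigma_true**2) * xi**2)

xi = np.array([0.0, 50.0, 100.0, 200.0])          # frequency, cycles per unit length
g_over = mismatch_gain(xi, 0.003, 0.0033)          # PSF width overestimated by 10%
g_under = mismatch_gain(xi, 0.003, 0.0027)         # PSF width underestimated by 10%
print(g_over)
print(g_under)
```

Overestimating the width boosts the high frequencies by a factor that grows like exp(cξ²), so artifacts appear even if the image itself were noise-free; underestimating it simply leaves some blur in.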

This class of problems is known as ill-posed. There are people spending their lives and careers on them, and there are journals devoted to them. Deconvolution is perhaps the simplest example; for Gaussian kernels it is equivalent to solving the heat equation backward in time.
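For the Gaussian case the equivalence can be written out in a few lines. A sketch, assuming the 1-D heat equation $u_t = u_{xx}$ and the FT convention $\hat f(\xi) = \int f(x)\,e^{-2\pi i x\xi}\,dx$ (both are choices made here, not taken from the post):

```latex
\begin{aligned}
&u_t = u_{xx}, \qquad u(x,0) = f(x) \\
&\Rightarrow\ \hat u(\xi,t) = e^{-4\pi^2 \xi^2 t}\,\hat f(\xi)
  \quad\text{(forward: blur by a Gaussian with } \sigma^2 = 2t) \\
&\Rightarrow\ \hat f(\xi) = e^{+4\pi^2 \xi^2 t}\,\hat u(\xi,t)
  \quad\text{(backward: deblur)}
\end{aligned}
```

The Gaussian's FT $e^{-2\pi^2\sigma^2\xi^2}$ with $\sigma^2 = 2t$ is exactly the factor divided out in the backward step, so any error in $\hat u(\xi,t)$ gets multiplied by $e^{4\pi^2\xi^2 t}$: the small-denominator problem again.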

Here is a reference from a math paper; see the example in the middle of the page. I know the author; he is a very well-respected specialist. Do not expect to read more there than I told you, because this is such a simple problem that it only serves as an introductory example to the theory.

Again, there is no need to invoke sensors and pixels at all. They can only make things worse by introducing more errors.

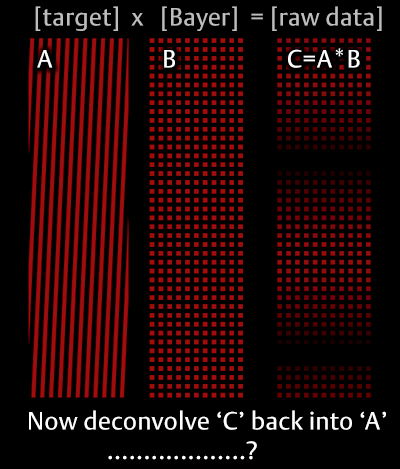

The main mistake so many people here make is that they are so deeply "spoiled" by numerics and discrete models that they automatically assume we have a discrete convolution. Well, we do not. We sample an image that has already been convolved. This makes the problem even more ill-posed.
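A sketch of why the discrete model is itself an approximation: it compares (1) blurring a continuous scene and then sampling, which is the physical order, with (2) sampling the scene first and applying a discrete convolution with a sampled PSF. Assumptions, all chosen for illustration: a 1-D bright bar whose edges do not fall on pixel boundaries, a Gaussian PSF narrower than a pixel, and point sampling at pixel centers (real sensors integrate over the pixel area, which is ignored here).

```python
import math
import numpy as np

n = 64
dx = 1.0 / n
sigma = 0.5 * dx                  # PSF narrower than one pixel (assumed)
a, b = 0.3, 0.7                   # edges of a bright bar, not aligned with pixels
xc = (np.arange(n) + 0.5) * dx    # pixel centers (point sampling assumed)

# 1) Physical order: blur the continuous scene, then sample.
#    A box convolved with a unit-mass Gaussian has a closed form via erf.
s = sigma * math.sqrt(2.0)
sampled_blur = np.array([0.5 * (math.erf((x - a) / s) - math.erf((x - b) / s)) for x in xc])

# 2) "Discrete convolution" model: sample the scene, then convolve with a sampled PSF.
f_pixels = ((xc >= a) & (xc < b)).astype(float)
offsets = np.arange(-3, 4) * dx
k = np.exp(-0.5 * (offsets / sigma) ** 2)
k /= k.sum()
discrete_model = np.convolve(f_pixels, k, mode="same")

print("max discrepancy:", np.max(np.abs(sampled_blur - discrete_model)))
```

Near the edges the two disagree substantially. That discrepancy is a model error that any deconvolution built on the discrete model inherits, on top of the noise.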