Hi!

I also use the Baader filter film (http://www.baader-planetarium.de/sektion/s46/s46.htm), which can be cut to any size for use IN FRONT of eyes, lenses and telescopes.

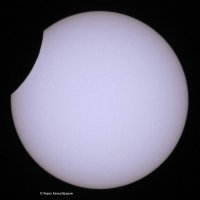

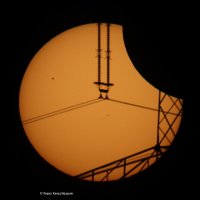

Attached are some examples of its use on:

1) EF 100-400 (no sunspots during that eclipse)

2) EF 500/4 (during sunrise, with a "solarpower" foreground)

3) the same eclipse, but later, with the sun higher up, hence the colour change!

4) a 6" telescope, showing the now much more active sun a few weeks ago

The camera was always an EOS 7D or 5DII.

With this kind of protection, both viewing and taking pictures are safe!!!

Cheers

Franz
