They're not even single-color sensing dots. All three types have some sensitivity to the entire visible spectrum. Putting a green color filter over a sensel on a digital sensor does not eliminate all red and blue light from entering it, any more than putting a green filter over a lens eliminates all red and blue light from reaching black and white film and makes any blue or red object in the scene pure black in the photo. It just makes the blue and red things look darker than green things that are the same brightness in the actual scene.
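To put rough numbers on that, here is a minimal sketch of a green-filtered sensel. The bell-shaped curve and its 50nm width are assumptions made up for illustration, not measurements of any real filter; only the three wavelengths are the usual textbook "blue", "green" and "red" values. The point is simply that the response away from the peak is reduced, not zero.

```python
import math

GREEN_PEAK_NM = 530  # nominal "green" wavelength


def filter_transmission(wavelength_nm, peak_nm, width_nm=50.0):
    """Hypothetical bell-shaped filter curve (assumed shape and width).

    Returns 1.0 at the peak and falls off smoothly on either side,
    so it never reaches exactly zero inside the visible spectrum.
    """
    return math.exp(-((wavelength_nm - peak_nm) ** 2) / (2 * width_nm ** 2))


def green_sensel_response(wavelength_nm, intensity=1.0):
    """Signal from a green-filtered sensel lit by a single wavelength."""
    return intensity * filter_transmission(wavelength_nm, GREEN_PEAK_NM)


if __name__ == "__main__":
    # Same light intensity at three wavelengths: blue and red still register,
    # they just come out darker than green.
    for name, wavelength in [("blue", 465), ("green", 530), ("red", 640)]:
        print(name, round(green_sensel_response(wavelength), 3))
```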

ALL THREE colors have to be interpolated when demosaicing is done, not only because each filtered pixel has overlapping sensitivity to the rest of the visible spectrum, but also because different light sources emit different parts of the visible spectrum in differing amounts.
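For anyone who has not seen what the spatial part of that interpolation looks like, here is a deliberately simple sketch. It assumes an RGGB layout and just averages the same-colored sensels in each 3x3 neighborhood. That is plain neighborhood averaging, not what any particular raw converter does, and it does nothing about the spectral overlap or the light source. The function names are made up for this example.

```python
def filter_color(row, col):
    """Filter color over the sensel at (row, col), assuming an RGGB layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def demosaic(raw):
    """Estimate (R, G, B) at every pixel of a Bayer mosaic.

    Each channel is the average of the sensels of that filter color inside
    the surrounding 3x3 window, clipped at the image edges.
    `raw` is a list of rows of numbers, at least 2x2 in size.
    """
    height, width = len(raw), len(raw[0])
    out = []
    for r in range(height):
        out_row = []
        for c in range(width):
            sums = {"R": 0.0, "G": 0.0, "B": 0.0}
            counts = {"R": 0, "G": 0, "B": 0}
            for rr in range(max(0, r - 1), min(height, r + 2)):
                for cc in range(max(0, c - 1), min(width, c + 2)):
                    color = filter_color(rr, cc)
                    sums[color] += raw[rr][cc]
                    counts[color] += 1
            out_row.append(tuple(sums[k] / counts[k] for k in "RGB"))
        out.append(out_row)
    return out
```

Every output pixel gets all three values from a neighborhood of sensels, each of which only ever recorded one filtered brightness.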

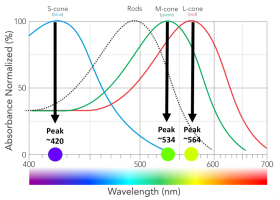

They all have to be interpolated as well because the three colors that most Bayer masks use are not the same three colors that RGB monitors emit. The three colors of Bayer mask filters are closer to the three colors to which each type of our retinal cones is most sensitive: a slightly violet blue, a slightly yellow green, and a slightly green yellow, though most Bayer masks use an orangish yellow color instead of the lime color to which our L cones are most sensitive.
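One common way to get from the filter colors to the monitor colors is a 3x3 matrix applied to each demosaiced pixel. The sketch below shows the idea only: the matrix values are hypothetical numbers picked for illustration (each row sums to 1 so neutral gray stays neutral), not the calibration for any real sensor or display.

```python
# Hypothetical camera-to-display matrix (illustrative values only).
# Each row sums to 1.0 so that equal camera R, G, B stays neutral.
CAMERA_TO_DISPLAY = [
    [1.6, -0.4, -0.2],   # display red   from camera R, G, B
    [-0.3, 1.5, -0.2],   # display green from camera R, G, B
    [0.0, -0.5, 1.5],    # display blue  from camera R, G, B
]


def camera_to_display(rgb):
    """Mix the three filtered channels into the three display channels."""
    return tuple(
        sum(weight * value for weight, value in zip(row, rgb))
        for row in CAMERA_TO_DISPLAY
    )


if __name__ == "__main__":
    # A signal seen only through the "green" filter still contributes
    # to all three display channels.
    print(camera_to_display((0.0, 1.0, 0.0)))
```

Each display channel ends up as a blend of all three filtered channels, so none of the three is passed through untouched.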

We named the cones "red", "green", and "blue" decades before we managed to measure the exact response of each type of cone to various wavelengths of light.

The reason trichromatic color masks on cameras and trichromatic reproduction systems (monitors or three-color printing) work is that our retinas and brains do the same thing. Our medium-wavelength (M) and long-wavelength (L) cones have a very large amount of overlapping sensitivity. Our short-wavelength (S) cones have less overlap with the M and L cones, but there is still some overlap there. If there were no overlapping sensitivity between the S, M, and L cones, our brains could not create colors. Colors do not exist in the electromagnetic spectrum. There are only wavelengths and frequencies. It's our brains that create color as a response to certain wavelengths/frequencies in the electromagnetic spectrum.

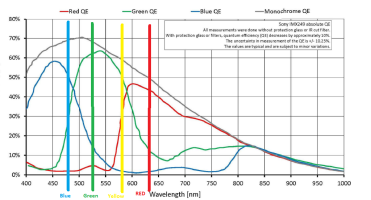

The "red" filtered part of this Sony IMX249 sensor peaks at 600nm, which is what we call "orange", rather than 640nm, which is the color we call "Red."

All of the cute little drawings on the internet notwithstanding, the actual colors of a Bayer filter are not Red (640nm), Green (530nm), and Blue (465nm).

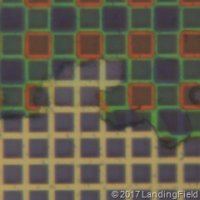

Actual image of an actual Bayer mask and a sensor. Part of the mask has been peeled away.