Rather than hijack any of the existing threads on DR, I thought I'd start a new one.

I'm still struggling a little with the method a few people (LetTheRightLensIn and maybe a couple of others) are using to compute dynamic range (DR).

Maybe my understanding of DR itself is faulty.

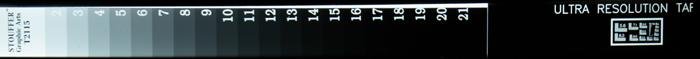

Isn't DR the total range, from the brightest recoverable detail to the darkest, that a camera can record in a single exposure?

If so, how does taking two separate exposures, one completely underexposed (body cap on, stopped down, fast shutter) and one completely overexposed (bright light, slow shutter), help in computing the maximum potential DR?
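If I've understood the two-frame method correctly, the arithmetic is roughly this: the dark frame gives the black level (its mean) and the noise floor (its standard deviation), the clipped frame gives the saturation level, and DR in stops is log2 of saturation over noise. Below is my own minimal sketch of that, assuming the raw values are available as plain lists of numbers. The function name and the 14-bit / 512 black level / 4 DN read-noise figures are made-up illustration values, not measurements from any camera.

```python
import math
import random
import statistics


def dynamic_range_stops(dark_frame, bright_frame):
    """Hypothetical helper: 'engineering' DR in stops from two raw frames."""
    # Body-cap frame: its mean is the black-level offset...
    black_level = statistics.fmean(dark_frame)
    # ...and its spread is the noise floor (read noise) with no light at all.
    noise_floor = statistics.pstdev(dark_frame)
    # Fully clipped frame: the highest value the sensor can record, minus the offset.
    saturation = statistics.median(bright_frame) - black_level
    # Ratio of biggest signal to noise floor, expressed in stops (powers of 2).
    return math.log2(saturation / noise_floor)


# Synthetic frames, NOT real camera data (assumed 14-bit raw, clip at 16383).
rng = random.Random(0)
dark = [rng.gauss(512, 4) for _ in range(10_000)]
bright = [16383] * 10_000
print(f"{dynamic_range_stops(dark, bright):.1f} stops")  # about 12 stops for these made-up numbers
```

This gives a per-pixel figure with the floor set where signal equals noise (SNR = 1), which is exactly the kind of number I'm unsure tells you much about a real scene.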

Wouldn't you instead need to meter at the median brightness point of a scene that contains a fairly gradual transition to complete black and a fairly gradual transition to complete white (i.e. clipped white and crushed black in the same exposure, with smooth gradients toward the middle) in order to determine useful DR?

I have a technical background, but I'm looking more for conceptual understanding than anything.

Thanks!
