It can't meaningfully be done with the equipment we have available because you can't use the same lens to do both at the same time, meaning that there will always be differences in the image created.

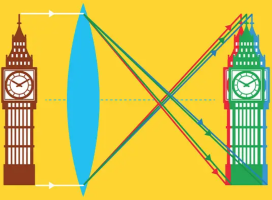

The best you could do would be to take a lens such as this and create a system where you can alter the position of the sensor relative to the back of the lens, so that when it is at 50mm the sensor sits further back, allowing the spread of light to cover more area. That assumes that the photons coming out the back of the lens are coming out at an angle.

It doesn't have to be the same lens, a similar one would be fine. As I've stated, I compared the RF 14-35/4 to the EF 11-24/4, where the latter at 14mm has very little geometric distortion to start. Corner sharpness of the corrected RF lens at 14mm was similar. That supports the idea that digital correction is non-inferior from an IQ standpoint. What I keep asking is for someone to provide some reasonable evidence to back up the claim that digital correction is inferior.

It would also require custom firmware to record what comes out of the sensor because I think Canon do the stretching very early so you can never see an unstretched image.

Wrong. The distortion correction (stretching) is done during conversion of the RAW image. If you open a RAW image from a lens that doesn't fill the corners and turn off the lens profile, you see the black corners.

This is the corner of a shot with the RF 24-105/2.8 Z taken just after I got the lens, before DxO had a correction profile.
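To make the "stretching" concrete, here is a minimal sketch of a radial correction in plain Python. It is not how Canon's DPP, DxO, or any other converter actually implements its lens profiles; the function name `correct_radial`, the single coefficient `k1`, and the tiny 9x9 test grid are all assumptions made for illustration, and real converters use more elaborate models and proper interpolation rather than nearest-neighbour sampling. The idea it shows is only that each output pixel is fetched from a source position closer to the centre, so the black corners of the uncorrected frame are filled with stretched image content.

```python
import math


def correct_radial(img, k1):
    """Stretch a 2D grid of pixel values with a one-term radial model.

    img is a list of equal-length rows (at least 2x2). With k1 < 0 each
    output pixel samples the source closer to the centre, which pushes
    image content outward into the corners. Hypothetical model, for
    illustration only.
    """
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    norm = math.hypot(cx, cy)  # the frame corner sits at normalised radius 1
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = (x - cx) / norm, (y - cy) / norm
            scale = 1.0 + k1 * (dx * dx + dy * dy)
            # Nearest-neighbour lookup of the source pixel.
            sx = round(cx + dx * scale * norm)
            sy = round(cy + dy * scale * norm)
            if 0 <= sx < w and 0 <= sy < h:
                out[y][x] = img[sy][sx]
    return out


# A 9x9 "RAW" frame whose extreme corners received no light (value 0).
captured = [[y * 9 + x for x in range(9)] for y in range(9)]
for y, x in ((0, 0), (0, 8), (8, 0), (8, 8)):
    captured[y][x] = 0

# k1 = -0.25 is an arbitrary value chosen for this demo.
corrected = correct_radial(captured, -0.25)
```

With `k1 = 0` the frame comes back unchanged, which corresponds to opening the RAW file with the lens profile turned off and seeing the black corners. With a negative `k1`, the corner of the output is taken from a pixel one step in from the corner of the capture, so the corner is filled by extrapolated (stretched) data rather than by light that actually reached that part of the sensor.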

Incidentally, there may be an easier way to achieve a practically meaningful result – the RF 24-105/2.8 Z doesn't fill the corners at 24mm but does by 28mm (maybe wider, I have not checked). So a comparison of filling the corners with digital extrapolation vs. with light is possible using the same lens (albeit at a different zoom setting, but a close one). I may try that at some point.