The RF 100-500mm f/7.1 and RF 100-400mm f/8 both resolve roughly 25% more on the R7 than on the R5, and the R7 is equivalent to an 82 Mpx FF sensor. One reason for this is that the Bayer filter decreases the effective resolution of the sensor by roughly 30%, so the onset of diffraction limitation appears later with higher Mpx count. Without going into more theory, that should be enough to tell you that there is more headroom for increasing Mpx count for the narrow lenses, and the f/2.8 lenses will benefit more.

With more extremely high-resolving lenses becoming available now, I actually do think that diffraction is a practical limiting factor.

It's true, though, that for most lenses stopping down past the diffraction limit will still improve sharpness.

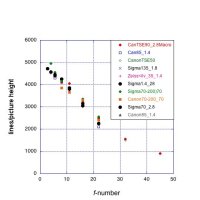

But if we take a theoretical "perfectly sharp" lens, then we actually are limited by diffraction. The R5, with 45 MP, has a diffraction limit of about f/7.1. We can already see this with the extremely sharp RF 135mm f/1.8 here: hover your mouse over the picture to see f/8 and move it away from the picture to see f/5.6. f/8 is noticeably softer and less contrasty.

Canon RF 135mm F1.8 L IS USM Lens Image Quality

View the image quality delivered by the Canon RF 135mm F1.8 L IS USM Lens using ISO 12233 Resolution Chart lab test results. Compare the image quality of this lens with other lenses.

www.the-digital-picture.com

So while diffraction might not look relevant right now, the 45 MP cameras of today are, in theory, absolutely diffraction-limited with lenses like the 100-500 f/7.1, the 200-800 f/9, and the 600 and 800 f/11. It just isn't apparent because the lenses aren't perfectly resolving the sensors, so stopping down still improves the image more than diffraction degrades it.
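For anyone who wants to check the numbers, here is a rough sketch of where a figure like f/7.1 can come from. It treats the Airy disk diameter (2.44 × wavelength × f-number) as the blur size and asks at which f-number that blur spans a given number of pixels. The 0.55 µm wavelength and the 2.2-pixel threshold are my assumptions: I picked 2.2 because it lands near the f/7.1 figure for 45 MP, not because it is a published constant. The function names and the approximate sensor dimensions are illustrative as well.

```python
import math

WAVELENGTH_UM = 0.55  # assumption: green light, roughly mid-spectrum
AIRY_PIXELS = 2.2     # assumption: Airy disk width, in pixels, where softening starts to show


def pixel_pitch_um(width_mm, height_mm, megapixels):
    """Approximate pixel pitch in micrometres, assuming square pixels."""
    area_um2 = (width_mm * 1000.0) * (height_mm * 1000.0)
    return math.sqrt(area_um2 / (megapixels * 1e6))


def diffraction_threshold_f_number(pitch_um,
                                   wavelength_um=WAVELENGTH_UM,
                                   airy_pixels=AIRY_PIXELS):
    """F-number at which the Airy disk diameter equals airy_pixels * pitch."""
    # Airy disk diameter (first minimum) = 2.44 * wavelength * f-number
    return airy_pixels * pitch_um / (2.44 * wavelength_um)


if __name__ == "__main__":
    # Sensor sizes are approximate published dimensions, used here as assumptions.
    sensors = {
        "R5 (full frame, 45 MP)": (36.0, 24.0, 45.0),
        "R7 (APS-C, 32.5 MP)": (22.3, 14.8, 32.5),
    }
    for name, (w, h, mp) in sensors.items():
        pitch = pixel_pitch_um(w, h, mp)
        n = diffraction_threshold_f_number(pitch)
        print(f"{name}: pitch ~{pitch:.2f} um, threshold ~f/{n:.1f}")
```

Changing `AIRY_PIXELS` moves the threshold proportionally, so treat the output as a ballpark, not a hard cutoff. That matches what we see in practice: softening sets in gradually around that aperture.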

This might mean that there is not much reason to increase the megapixel count, given the current market preference for small and lightweight lenses that need small apertures to achieve this form factor. I wouldn't be surprised if the R5 II stays in the 45 MP range. Same for the Sony R range: I don't think it will go much beyond 61 MP.
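To put a rough number on that, the same rule of thumb can be turned around: for a given maximum aperture, at what full-frame megapixel count would the Airy disk reach the threshold width? This is only a sketch under the same assumptions as before (0.55 µm light, a 2.2-pixel threshold that I chose myself, a 36 × 24 mm sensor), and the function name is made up for illustration.

```python
WAVELENGTH_UM = 0.55  # assumption: green light
AIRY_PIXELS = 2.2     # assumption: Airy disk width, in pixels, at the threshold
FF_WIDTH_MM = 36.0    # nominal full-frame sensor width
FF_HEIGHT_MM = 24.0   # nominal full-frame sensor height


def threshold_megapixels(f_number,
                         wavelength_um=WAVELENGTH_UM,
                         airy_pixels=AIRY_PIXELS):
    """Full-frame megapixel count at which f_number sits right at the threshold."""
    # Pixel pitch whose airy_pixels-wide span equals the Airy disk diameter.
    pitch_um = 2.44 * wavelength_um * f_number / airy_pixels
    area_um2 = (FF_WIDTH_MM * 1000.0) * (FF_HEIGHT_MM * 1000.0)
    return area_um2 / (pitch_um ** 2) / 1e6


if __name__ == "__main__":
    for f_number in (2.8, 7.1, 9.0, 11.0):
        print(f"f/{f_number}: ~{threshold_megapixels(f_number):.0f} MP on full frame")
```

Under these assumptions the slow zooms and the f/11 primes already sit at or below today's pixel counts, while an f/2.8 lens would have room for far more. The Bayer filter and real lens aberrations are ignored here, so the real-world crossover is softer than these figures suggest.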

This leads me to wonder where camera makers will head next. We already have really good, ergonomic camera bodies. We have more than enough frames per second for most applications, and we have a practically sufficient number of megapixels. I think the R5 II will be a really big tell for where the camera industry is heading, as I think we won't see much more meaningful improvement for most people. I hope it will be a great camera, but I'm unsure what could meaningfully be improved, except for making it a stacked sensor and maybe adding a bit more dynamic range.
