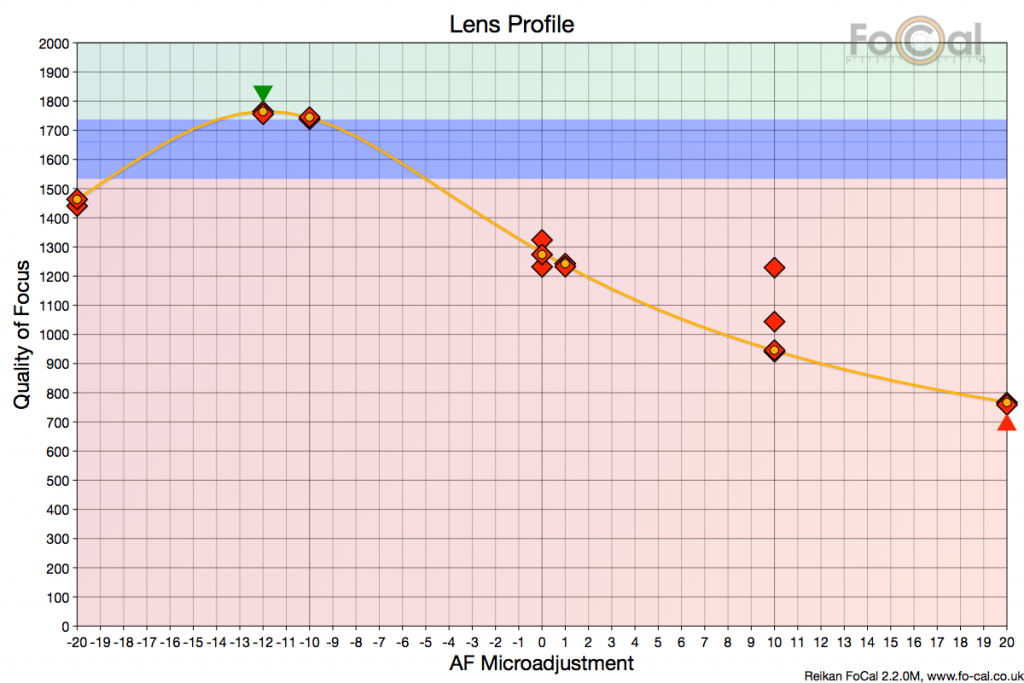

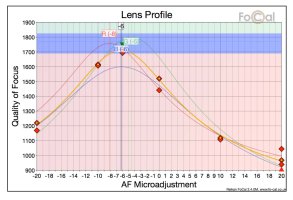

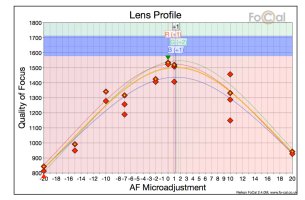

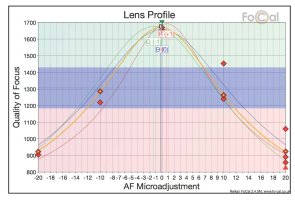

Reikan FoCal automatically collects the results from the huge number of calibrations its users run, so we can compare our "Quality of Focus (QoF)" data with the range of values found by other users.

http://www.reikan.co.uk/focalweb/index.php/2016/08/focal-2-2-add-full-canon-80d-and-1dx-mark-ii-more-comparison-data-and-internal-improvements/

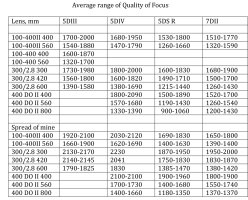

It's a very useful guide to how our copies of lenses perform compared with the rest out there, and to how different bodies react to different lenses. I think QoF is a measure of a lens's acutance, that is, the sharpness of a black-white transition. Here is a table for the telephotos I have used on various bodies over the years. The ranges given seem to fit the trends I find for my own lenses. Fortunately, my expensive primes are all above the average ranges. My 100-400mm II, which Lensrentals finds to be very consistent across many copies, tends to fall in the average ranges, as you would expect.

The comparative values are updated regularly, as I can see from some lens-camera combinations that were not covered until very recently. FoCal is providing an independent database built from many copies. It's quite a resource.