Loswr

Guest

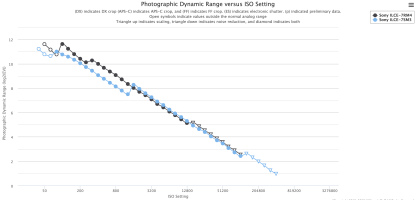

> Larger pixels capture more photons. This is why the Sony A7S3 has a 12MP sensor, to allow it to capture better low-light video.

Just to drive the point home, compare the dynamic range (DR) of the a7S III to the contemporary a7R IV (the a7R V has the same plot). Same size sensor, but one is 60 MP and the other is 12 MP and therefore has significantly larger pixels. By your 'large pixel' logic the a7S III should have lower noise and better DR. Except that... it doesn't. Even at high ISO (typically used in low light), it's no better than a sensor with much smaller pixels but the same total area.
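If it helps, here is a rough back-of-the-envelope sketch of why pixel count drops out once you compare at the same output size. It is a simplified model, not a description of either camera: the function name, the total photon count, and the read-noise figure are all made-up illustrative values, and it assumes both sensors collect the same total light over the same area.

```python
import math

def snr_at_output_size(total_photons, sensor_mp, output_mp=12.0, read_noise_e=0.0):
    """SNR of one output pixel after combining sensor pixels down to output_mp.

    Hypothetical helper for illustration only; assumes Poisson shot noise plus
    an optional per-pixel read noise (in electrons), and ideal binning.
    """
    sensor_pixels = sensor_mp * 1e6
    output_pixels = output_mp * 1e6
    pixels_binned = sensor_pixels / output_pixels   # sensor pixels per output pixel
    signal = total_photons / output_pixels          # photons landing in one output pixel
    shot_var = signal                               # Poisson: variance equals the mean
    read_var = pixels_binned * read_noise_e ** 2    # read noise adds once per sensor pixel
    return signal / math.sqrt(shot_var + read_var)

total = 1.2e9  # assumed photon count over the whole frame (illustrative)

snr_12 = snr_at_output_size(total, sensor_mp=12)
snr_60 = snr_at_output_size(total, sensor_mp=60)
print(snr_12, snr_60)  # identical when only shot noise is considered

# With some read noise (assumed 2 e- per pixel) the small-pixel sensor gives up a little.
print(snr_at_output_size(total, sensor_mp=60, read_noise_e=2.0))
```

In this toy model the shot-noise SNR depends only on the total light and the output size, which is the point above. Read noise is the one place where more, smaller pixels can cost something, and how much depends on the actual sensor.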

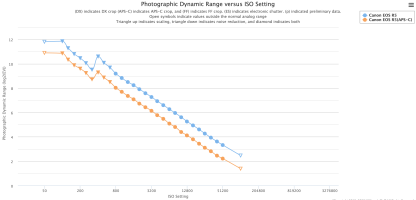

Now look at it from the other side. Here's the R5 in regular vs. crop mode: same size pixels (the exact same pixels, in fact), same sensor, but imaging with a smaller area of the sensor. If pixel size were the determinant, DR would be the same, since the pixels are identical. Clearly, it's not. Using an APS-C area of the FF sensor costs you about a stop of DR. Less total light gathered means more image noise, which means less DR.
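For a rough sense of scale, here is a minimal sketch of what a pure shot-noise model predicts for cropping. The function name is hypothetical and the crop factors are assumptions (1.5 and 1.6 are the common APS-C values); it ignores read noise and everything else that goes into a measured DR plot, so treat it as a ballpark, not a prediction of the chart.

```python
import math

def shot_noise_stops_lost(crop_factor):
    """Stops of SNR lost by cropping, assuming shot noise only and the same output size."""
    area_ratio = crop_factor ** 2        # full-frame area divided by cropped area
    snr_ratio = math.sqrt(area_ratio)    # shot-noise SNR scales with sqrt(total photons)
    return math.log2(snr_ratio)          # express the SNR ratio in stops

for crop in (1.5, 1.6):  # assumed APS-C crop factors
    print(f"crop {crop}: light gathered drops {crop ** 2:.2f}x, "
          f"about {shot_noise_stops_lost(crop):.2f} stops of SNR")
```

This simple model lands at a bit over half a stop, in the same ballpark as the roughly one stop read off the plot. Either way the loss comes from the smaller area collecting less light, not from anything about the pixels.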
